I have been asked to give an opening keynote at the Cyber Security Forum in Pescara this month, and the questions posed by the organisers go straight to the heart of complex and debated issues. We are talking about the so-called quantum threat.

Wired Italia publishes the Italian version of this article.

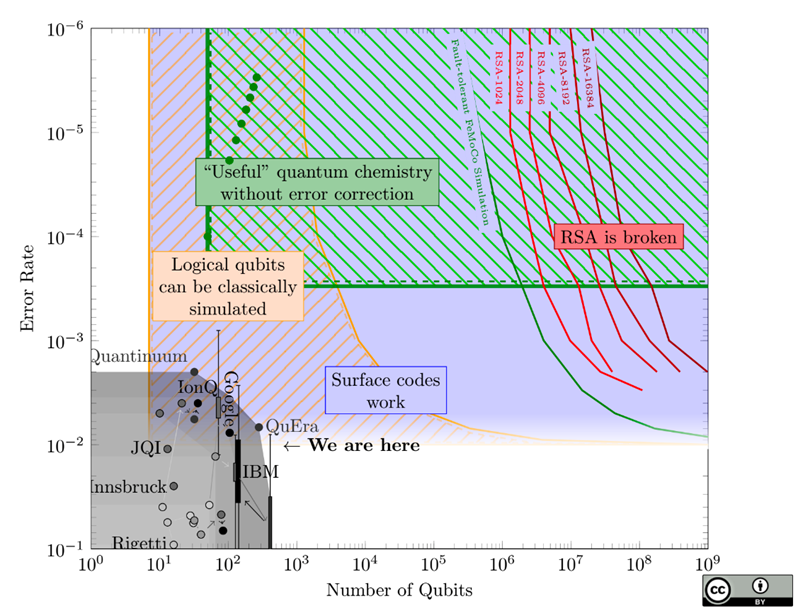

Quantum computing capacity is developing slowly but inexorably within major companies such as IBM, Google, Nvidia and Microsoft, in smaller companies specialising only in this sector such as QuEra, IonQ, Rigetti and Quantinuum, and in academic research institutes such as the University of Innsbruck and the Joint Quantum Institute in the USA. These organisations are not interested in breaking existing algorithms; they aim to make possible calculations once unthinkable, which could revolutionise many research fields, starting with chemistry and physics.

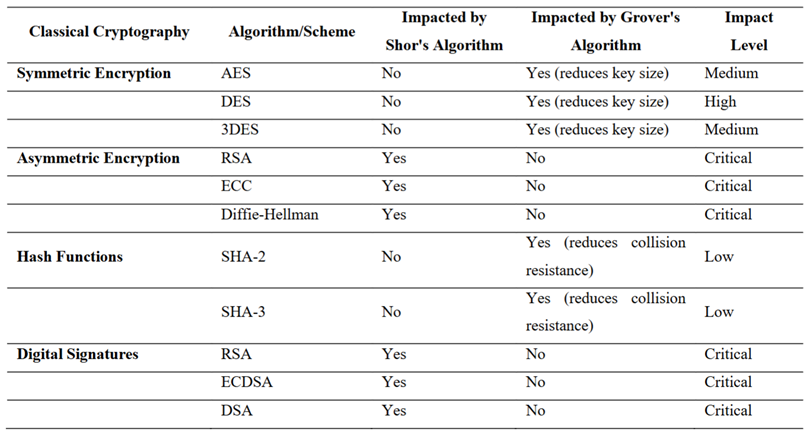

Meanwhile, in cryptography, and therefore in computer security, this qualitatively new computing capacity brings the danger of devastating global consequences for authentication and data access control. Shor's algorithm (1994) and Grover's algorithm (1996) already showed in theory, three decades ago, the inevitable quantum vulnerability of the cryptographic algorithms indicated in this table:
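To make the threat less abstract, here is a toy sketch in Python of the classical part of Shor's algorithm. Shor reduces factoring N to finding the order r of a base a modulo N, that is the smallest r with a^r ≡ 1 (mod N); only that order-finding step needs a quantum computer. In this sketch it is replaced by brute force, which is exponential on classical hardware, so it only works for tiny numbers like N = 15. The function names are my own, chosen for illustration.

```python
from math import gcd
from typing import Optional, Tuple


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 such that a**r % n == 1, found by brute force.

    Shor's algorithm performs this step efficiently on a quantum computer;
    this classical loop takes time exponential in the bit length of n.
    """
    if n < 2 or gcd(a, n) != 1:
        raise ValueError("need n >= 2 and a coprime with n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Classical post-processing of Shor's algorithm for a chosen base a."""
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: try another base a
    x = pow(a, r // 2, n)  # x*x is 1 mod n and x != 1, since r is the order
    if x == n - 1:
        return None  # trivial square root of 1: try another base a
    return gcd(x - 1, n), gcd(x + 1, n)


print(find_order(7, 15))         # 4
print(factor_from_order(15, 7))  # (3, 5)
```

Running the same reduction against a 2048-bit RSA modulus is hopeless on classical hardware; a fast quantum order-finding step is exactly what would make it feasible.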

Like every eschatology, this prophecy (let's call it Q-Day) polarises opinion: on one side are those who paint it as an imminent digital apocalypse, on the other those who consider it a problem so remote that it can be safely ignored. The reality, as usual, is more complex and cannot be summarised by taking maximalist positions.

The risk that a quantum computer could today decrypt, en masse, communications protected by algorithms such as RSA or ECC is nil. The same holds for the signatures that seal digital documents and contracts: nobody can forge them with a quantum computer today. However, the question of how concrete the risk is today cannot be dismissed with a simple "not yet".

The real current risk, often underestimated, is that of the so-called "harvest now, decrypt later".

This means that data encrypted today with quantum-vulnerable algorithms can be intercepted, archived and patiently stored until a sufficiently powerful quantum computer can decrypt it. This retroactively exposes all information that must remain confidential beyond the presumed arrival of such machines. Think of state secrets, sensitive address books and concessions.
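The condition described here can be written as a simple check over an inventory of data: a record is exposed if the year it can be captured plus the number of years it must stay secret goes past the expected Q-Day. The Python sketch below uses a hypothetical `DataAsset` record, made-up example entries and an arbitrary Q-Day year; it only illustrates the reasoning, not a real classification tool.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataAsset:
    name: str
    captured_year: int   # year the ciphertext can be intercepted and archived
    secrecy_years: int   # how long the plaintext must remain confidential


def exposed_to_hndl(asset: DataAsset, q_day_year: int) -> bool:
    """True if the data must still be secret when a quantum computer can decrypt it."""
    return asset.captured_year + asset.secrecy_years > q_day_year


def at_risk(inventory: List[DataAsset], q_day_year: int) -> List[str]:
    """Names of the assets exposed to "harvest now, decrypt later"."""
    return [a.name for a in inventory if exposed_to_hndl(a, q_day_year)]


# Hypothetical inventory and Q-Day estimate, for illustration only.
inventory = [
    DataAsset("web session token", 2025, 0),
    DataAsset("state secret", 2025, 50),
    DataAsset("quarterly sales report", 2025, 2),
]
print(at_risk(inventory, q_day_year=2035))  # ['state secret']
```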

This scenario alone should make us reflect on the real urgency of the issue, regardless of predictions about when the fateful Q-Day will arrive. It fully justifies the alarm about this future threat already raised last year by the Italian National Security Agency, as well as the indicative transition deadlines set by the US National Institute of Standards and Technology (NIST), a reference in the sector, which suggests deprecating vulnerable algorithms by 2030 and disallowing them by 2035.
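For organisations that keep an inventory of their cryptography, deadlines like these translate naturally into a policy check. The Python sketch below encodes only the simplified 2030/2035 dates given above; the list of algorithm names, the status labels and the choice of treating 2030 and 2035 as the first affected years are my assumptions, and NIST's own guidance is more detailed.

```python
# Simplified reading of the NIST transition deadlines quoted in the text.
# Quantum-vulnerable public-key families (breakable with Shor's algorithm).
QUANTUM_VULNERABLE = {"RSA", "DH", "ECDH", "ECDSA", "EdDSA"}

DEPRECATED_FROM = 2030  # assumption: deprecated starting in this year
DISALLOWED_FROM = 2035  # assumption: disallowed starting in this year


def transition_status(algorithm: str, year: int) -> str:
    """Return the simplified status of an algorithm in a given year."""
    if algorithm not in QUANTUM_VULNERABLE:
        return "not affected by this rule"
    if year < DEPRECATED_FROM:
        return "allowed"
    if year < DISALLOWED_FROM:
        return "deprecated"
    return "disallowed"


for year in (2025, 2030, 2035):
    print(year, transition_status("RSA", year))
# 2025 allowed
# 2030 deprecated
# 2035 disallowed
```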

With this article I try to clarify things a little, or at least to set out the situation as I see it without beating around the bush, by answering three questions for my opening speech at the Pescara conference. I aim to provide a concise and pragmatic perspective, based on known facts and a healthy dose of scepticism towards easy enthusiasm or unjustified alarmism.

Here are the questions posed to me by the scientific director of the event, Francesco Perna, which I will attempt to answer:

- How concrete is the risk today that quantum computers could compromise current cryptography systems, and what timeframes should we prepare for?

- What are the main obstacles to adopting post-quantum cryptography, especially in public and infrastructural contexts?

- From your point of view, will the transition to post-quantum cryptography be gradual, or will we see moments of strong discontinuity in system security?

1: Risks and Timeframes

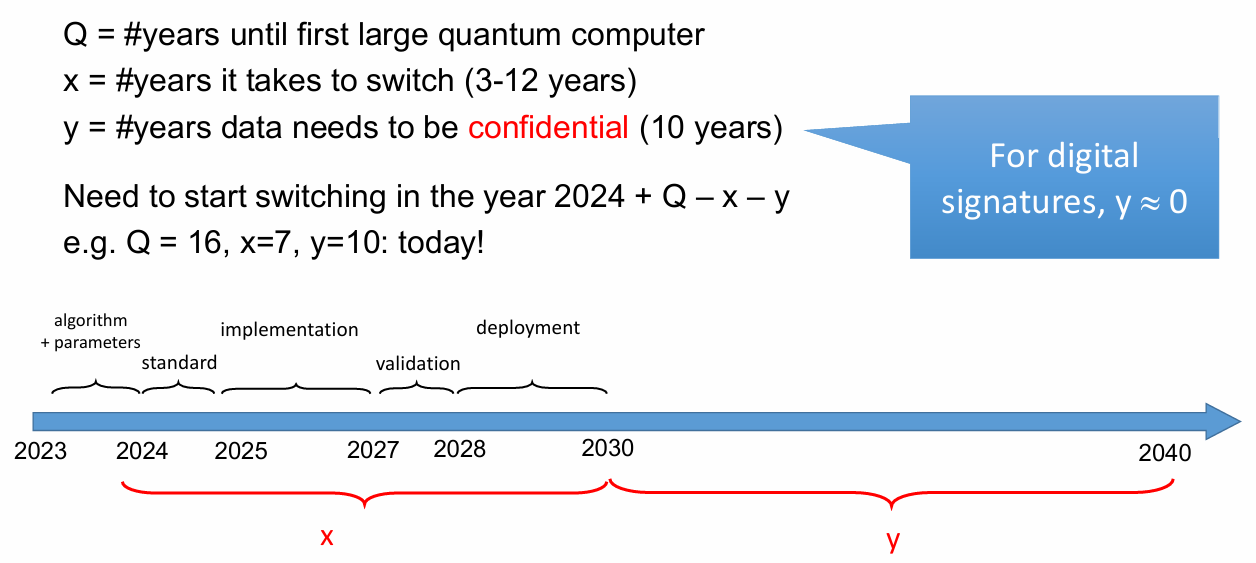

As I have already written, the risk is real but not immediate. Authoritative, if rather approximate, estimates indicate that we could see cryptographically relevant quantum computers within five to fifteen years. Feel free to choose whom to believe, and let's call this value Q.

From this estimate we must subtract the number of years during which signatures or credentials, such as driving licences and identity cards, must remain unforgeable, or during which access to confidential data, such as industrial secrets, must remain controlled; let's optimistically say ten years, though it's up to you. This is the variable Y.

Finally, we must subtract the years your organisation needs to update all its code, procedures and possibly its IT architecture to add protections resistant to quantum computation. Let's say you need three to twelve years, depending on the size and complexity of your systems, the readiness and availability of your IT department, and many other specific factors that I will not list here. This is the variable X, to which I will give a blanket value of five.

So let's do a simple calculation:

Q = 15, Y = 10, X = 5

2025 + Q - X - Y = 2025 + 15 - 5 - 10 = 2025

The time to start is today!
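The same arithmetic as a small Python function, so you can plug in your own estimates. It computes the latest year to start migrating, 2025 + Q - X - Y in the notation above; a negative slack means you are already late. The function names are mine, for illustration.

```python
def latest_start_year(now: int, q: int, y: int, x: int) -> int:
    """Latest year to begin migration: now + Q - Y - X.

    q: years until a cryptographically relevant quantum computer (Q)
    y: years that data or signatures must stay secure (Y)
    x: years the organisation needs to migrate (X)
    """
    return now + q - y - x


def slack_years(now: int, q: int, y: int, x: int) -> int:
    """Years left before migration must start (negative means late)."""
    return latest_start_year(now, q, y, x) - now


# Figures quoted in the text.
print(latest_start_year(2025, q=15, y=10, x=5))  # 2025: start today
print(slack_years(2025, q=30, y=12, x=20))       # -2: two years late
```

Written as an inequality, you are already late whenever X + Y > Q.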

According to this simple exercise, suggested by Professor Bart Preneel, the time to start adopting cryptographic defences resistant to quantum attacks is... today. Other experts are more pessimistic: Michele Mosca of the Institute for Quantum Computing (IQC) in Canada, for example, estimates that we are already two years late (Q=30, Y=12, X=20).

2: Obstacles

The challenge is not solely technical. Bureaucratic inertia, system obsolescence and the complexity of large-scale updates create significant barriers. These factors could dangerously delay migration in critical and often too rigid sectors such as public infrastructure.

Furthermore, update timelines already look implausible for suppliers of systems whose security model relies on hardware (such as HSMs, TEEs and secure elements). One can always hope that some genius will produce backward-compatible algorithms, as Matteo Frigo is doing with longfellow-zk, or one can restrict oneself to building new signature algorithms only from functions already present in the hardware, as with FIPS 205 (SLH-DSA), which was standardised just recently.
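To see why a hash-based scheme can run on hardware that only offers a hash function, here is a Lamport one-time signature in Python, using SHA-256 from the standard library. This is not SLH-DSA: FIPS 205 builds a stateless scheme by combining more efficient one-time and few-time signatures in a tree of hashes. Lamport is the simplest ancestor of that family and shares its key property, security that rests only on the hash function. The function names are illustrative, and each key pair must sign exactly one message.

```python
import hashlib
import secrets
from typing import List, Tuple

Key = List[Tuple[bytes, bytes]]


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def keygen() -> Tuple[Key, Key]:
    """256 pairs of random secrets; the public key is their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(h(s0), h(s1)) for s0, s1 in sk]
    return sk, pk


def digest_bits(message: bytes) -> List[int]:
    """The 256 bits of SHA-256(message), most significant first."""
    d = int.from_bytes(h(message), "big")
    return [(d >> (255 - i)) & 1 for i in range(256)]


def sign(sk: Key, message: bytes) -> List[bytes]:
    """Reveal one secret per digest bit. Never reuse sk for another message."""
    return [sk[i][bit] for i, bit in enumerate(digest_bits(message))]


def verify(pk: Key, message: bytes, signature: List[bytes]) -> bool:
    """Each revealed secret must hash to the public value for that bit."""
    if len(signature) != 256:
        return False
    return all(
        h(s) == pk[i][bit]
        for i, (bit, s) in enumerate(zip(digest_bits(message), signature))
    )


sk, pk = keygen()
sig = sign(sk, b"contract v1")
print(verify(pk, b"contract v1", sig))  # True
print(verify(pk, b"contract v2", sig))  # False
```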

In any case, the more complex the situation, the more specific the solution must be. The real obstacle is therefore the time and attention needed for solutions that should not be applied in the emergency mode to which some managers are sadly accustomed. Working in emergency mode always generates a great deal of stress for everyone, and stress, let's not forget, is an obstacle capable of generating many others.

3: Transition

The more time passes, the greater the risk and the less smooth the transition. Having time also means being able to absorb delays; those who do not move early will feel increasingly under threat, which is not a good thing... even if, in the security field, some are used to it.

I even believe that the transition could occur through a sharp break, because of the exponential progression that development will take on after a certain point. Sam Jaques more or less agrees, and has drawn this explanatory graph:

In this graph, the blue region indicates where physical qubits are good enough to create logical qubits. The striped yellow region shows where the physical qubits can no longer be simulated classically, yet the few logical qubits they yield are still few enough that error-corrected algorithms can be simulated on a classical computer. The green and red lines indicate the requirements (number of logical qubits and error rates of physical gates) for practical applications such as molecule simulation, or for harder applications such as breaking RSA.

We are in a phase where research is still exploring different qubit technologies and approaches to error correction, such as the "surface code", on which recently produced dedicated chips are based. Partly because of a Moore's-law-like trend, I believe that reaching scientific utility (the green region) will take us longer than getting from there to the red lines.

In other words, I believe that as soon as quantum computation provides practical advantages to industry, the evolution of quantum computation technologies will accelerate exponentially.
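A back-of-the-envelope way to see this argument: under exponential growth, what matters between two thresholds is their ratio rather than their difference, since each doubling costs the same time. The Python sketch below measures both gaps in doublings. Every number in it (current capability, the two thresholds, the doubling times) is a hypothetical placeholder, not a real hardware estimate, and the shorter doubling time after the green line simply encodes the acceleration described above.

```python
import math


def years_between(start: float, target: float, doubling_years: float) -> float:
    """Years for a quantity that doubles every `doubling_years` to grow from start to target."""
    return doubling_years * math.log2(target / start)


# Hypothetical placeholders, not real hardware figures.
current = 1_000         # today's capability (e.g. physical qubits)
green = 100_000         # threshold for scientific utility
red = 1_000_000         # threshold for breaking RSA
doubling_before = 2.0   # years per doubling before utility
doubling_after = 1.0    # years per doubling once industry invests heavily

to_green = years_between(current, green, doubling_before)
green_to_red = years_between(green, red, doubling_after)
print(round(to_green, 1), round(green_to_red, 1))  # 13.3 3.3
```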

About Me

My name is Denis Roio, also known as Jaromil. I am a hacker, and I was at the origin of the Dyne.org foundation.

My analysis is based on solid experience, including more than a decade leading development projects for the European Commission.

I am a co-founder of the Italian Cryptography Society "De Componendis Cifris" and of the Metro Olografix association in Pescara, co-chair of the W3C Security Interest Group (SING), and scientific director of The Forkbomb Company.